AI (ARTIFICIAL INTELLIGENCE)

What are AI Regulatory Frameworks?

AI Regulatory Frameworks are sets of laws, guidelines, and standards developed by governments, international bodies, and organizations to ensure the ethical, safe, and responsible development and deployment of artificial intelligence technologies.

Why Are They Needed?

- Rapid AI Growth: AI is advancing quickly, impacting sectors like healthcare, finance, transportation, and more.

- Ethical Concerns: Issues like bias, discrimination, privacy invasion, and lack of transparency need to be addressed.

- Safety and Security: Preventing harm from malfunctioning AI or malicious use is critical.

- Accountability: It must be clear who is responsible for AI decisions and outcomes.

Core Principles Often Included:

- Transparency: AI systems should be explainable and understandable.

- Fairness and Non-Discrimination: Avoiding biases that harm individuals or groups.

- Privacy Protection: Safeguarding personal data used by AI.

- Safety and Robustness: Ensuring AI systems perform reliably and safely.

- Accountability: Clear responsibility for AI design, deployment, and outcomes.

Key Frameworks and Initiatives:

- European Union’s AI Act: One of the most comprehensive AI regulations, sorting AI systems into risk tiers (unacceptable, high, limited, and minimal) and imposing strict requirements on high-risk applications.

- OECD AI Principles: International guidelines promoting trustworthy AI, adopted in 2019 and initially endorsed by 42 countries.

- US AI Initiatives: Guidance that balances innovation with risk management, with agencies such as NIST developing AI standards, including its AI Risk Management Framework.

- UNESCO’s Recommendation on the Ethics of AI: Promotes global cooperation on ethical AI development.
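
The risk-based approach of the EU AI Act can be pictured as a simple triage step. The sketch below is only an illustration in Python: the four tiers (unacceptable, high, limited, minimal) follow the way the Act is commonly summarized, while the use-case labels, the `EXAMPLE_USE_CASES` table, and the `triage` function are hypothetical names made up for this example and are not a reading of the legal text.

```python
from enum import Enum


class RiskTier(Enum):
    """Four risk tiers commonly used to summarize the EU AI Act."""
    UNACCEPTABLE = "prohibited"
    HIGH = "strict requirements"
    LIMITED = "transparency obligations"
    MINIMAL = "no additional obligations"


# Hypothetical lookup table: the use-case labels and their tiers are
# illustrative assumptions, not a classification taken from the law.
EXAMPLE_USE_CASES = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "cv_screening": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


def triage(use_case: str) -> str:
    """Return a one-line triage note, or flag the case for manual review."""
    tier = EXAMPLE_USE_CASES.get(use_case)
    if tier is None:
        return f"{use_case}: unknown, needs legal review"
    return f"{use_case}: {tier.name.lower()} risk ({tier.value})"


if __name__ == "__main__":
    for case in ("cv_screening", "spam_filter", "drone_delivery"):
        print(triage(case))
```

In practice, assigning a tier is a legal judgment rather than a dictionary lookup, which is why unknown cases are routed to review instead of defaulting to a tier.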

Implementation Tools:

- Auditing and Certification: Independent checks to ensure AI systems comply with laws and standards.

- Impact Assessments: Evaluations before deployment to assess risks and harms.

- AI Ethics Boards: Committees set up within companies and governments to oversee AI projects.
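
To make the auditing idea concrete, here is a minimal sketch of one check an audit or impact assessment might include: comparing how often each group receives a favorable decision. The metric (a gap in selection rates), the `MAX_GAP` threshold, the function names, and the sample data are all assumptions chosen for illustration; none of them comes from a specific law or standard.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable decisions per group.

    `records` is an iterable of (group, approved) pairs, where `approved`
    is True when the system produced a favorable decision.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Made-up decisions for two hypothetical groups, "A" and "B".
decisions = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

MAX_GAP = 0.2  # assumed audit threshold, not taken from any regulation

gap = parity_gap(decisions)
verdict = "flag for review" if gap > MAX_GAP else "within threshold"
print(f"selection rates: {selection_rates(decisions)}")
print(f"parity gap: {gap:.2f} -> {verdict}")
```

A real audit would look at more than one metric and at the context behind the numbers, but even a simple check like this gives reviewers something measurable to track over time.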

Challenges:

- Global Coordination: AI development is global, but regulations vary by country.

- Keeping Pace: Laws must evolve alongside rapidly changing technology.

- Balancing Innovation and Control: Regulations should protect society without stifling innovation.

- Enforcement: Ensuring compliance without overly burdensome bureaucracy.

The Future:

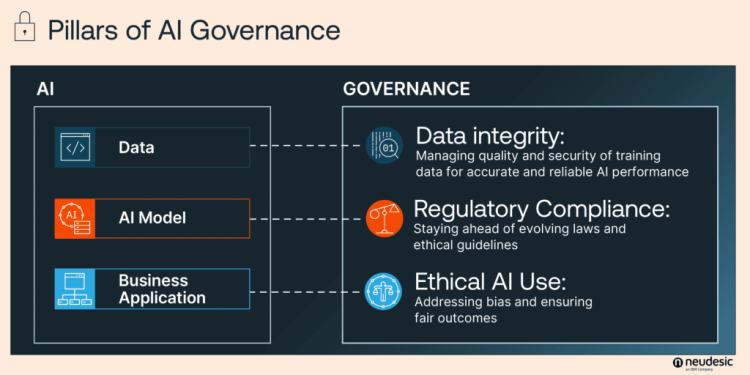

- Growing focus on “AI governance”: the broader system of policies, ethical norms, and oversight that addresses AI's societal impacts.

- Increasing public participation in AI policymaking.

- Development of more nuanced, sector-specific regulations.

AI Regulatory Frameworks are crucial to building public trust and ensuring AI technologies benefit society fairly and safely. Want me to break down specific laws or explore how companies implement compliance strategies? Just let me know! ⚖️🤖